The Datalab CSN

Exploring collection spaces

Imagine wandering through the vast digital collections of the Badisches Landesmuseum Karlsruhe and the Allard Pierson Museum Amsterdam, each holding a treasure trove of objects waiting to be explored. The Collection Space Navigator (Ohm et al., 2023) serves as a virtual guide and offers a new way to engage with these collections. Adapted as part of the Creative User Empowerment project, the Datalab CSN acts as a bridge between the curators’ expertise and the rich visual data available to them.

Datalab CSN: https://datalab.landesmuseum.de/CSN/

Visual Similarity: An Alternative Approach

Navigating extensive cultural collections is challenging due to scale, rigid categorisation and limited support for serendipitous exploration. When it comes to image exploration, conventional methods of categorisation based on class labels may seem intuitive, but they impose restrictions on the fluid nature of visual content. A text-based analysis of images can miss many aspects of the visual data and ignore the complex interactions among different visual elements (Arnold & Tilton, 2023, p. 38).

An alternative approach is similarity-based navigation using interactive visualisations. Image vectors offer an effective way to capture the complexity of visual relationships. An image vector is a numerical descriptor representing the visual features of an image. Its value lies in making visual similarity measurable: images with similar features produce vectors that lie close to each other, so images can be grouped by their visual characteristics rather than by predefined labels. This, in turn, allows human viewers to explore these groups before attributing meanings to them.
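To make the idea of vector similarity concrete, here is a minimal Python sketch that compares image vectors with cosine similarity, one common measure for this purpose, and ranks the closest matches. The toy 4-dimensional vectors and the IDs `img_a` to `img_c` are made up for illustration; we do not claim that the Datalab CSN uses this exact measure internally.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(query: list[float], vectors: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Return the k image IDs whose vectors are most similar to the query vector."""
    scored = [(image_id, cosine_similarity(query, vec)) for image_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical 4-dimensional vectors standing in for 2048-dimensional image vectors.
vectors = {
    "img_a": [0.9, 0.1, 0.0, 0.2],
    "img_b": [0.8, 0.2, 0.1, 0.3],
    "img_c": [0.0, 0.9, 0.8, 0.1],
}
print(most_similar([1.0, 0.1, 0.0, 0.2], vectors, k=2))
```

Cosine similarity ignores the overall magnitude of a vector and compares only its direction, which is why it is a popular choice for comparing feature vectors.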

For our Datalab CSN, we used a convolutional neural network (CNN) that acts as a visual connoisseur. Specifically, a ResNet50 model (He et al., 2016) trained on the ImageNet dataset (Deng et al., 2009) encodes each image into a feature vector. These vectors serve as digital fingerprints and together form a multidimensional space with 2048 dimensions.
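Where do the 2048 dimensions come from? In ResNet50, the last convolutional stage produces 2048 feature maps, and a global average pooling layer averages each map into a single number before the final 1000-class ImageNet classifier. Dropping that classifier leaves one 2048-dimensional vector per image. The standard-library sketch below illustrates only this pooling step on a tiny, made-up feature map; the function name `global_average_pool` is our own.

```python
def global_average_pool(feature_map: list[list[list[float]]]) -> list[float]:
    """Collapse a C x H x W feature map into a C-dimensional vector.

    Each channel's H x W activations are averaged into one number.
    In ResNet50, C = 2048 (and H = W = 7 for a 224 x 224 input image).
    """
    vector = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        vector.append(sum(values) / len(values))
    return vector

# Toy feature map: 3 channels of 2 x 2 activations instead of 2048 channels of 7 x 7.
toy_map = [
    [[1.0, 3.0], [5.0, 7.0]],
    [[0.0, 0.0], [0.0, 4.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
print(global_average_pool(toy_map))  # [4.0, 1.0, 2.0]
```

In a real pipeline the feature maps come from the pretrained network itself. With torchvision, for example, one common pattern is to load `torchvision.models.resnet50` with ImageNet weights and replace `model.fc` with `torch.nn.Identity()`, so that the forward pass returns the pooled 2048-dimensional vector; the Datalab CSN's own extraction code may differ.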

To translate this multidimensional vector space into a comprehensible 2D visualisation, we applied a dimensionality reduction method called UMAP, short for Uniform Manifold Approximation and Projection (McInnes et al., 2018). To simplify the user experience and keep the focus on the collections, we opted for a single projection method in the Datalab CSN.
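UMAP works in two stages: it first builds a weighted k-nearest-neighbour graph of the high-dimensional vectors, then optimises a 2D layout whose neighbourhoods match that graph. The Python sketch below covers only a simplified version of the first stage, following the formulas in McInnes et al. (2018): for each point, rho is the distance to its nearest neighbour and sigma is found by binary search so that the neighbour weights sum to log2(k); the two directed weights of each edge are then combined with the fuzzy union a + b - ab. The function names, the Euclidean distance and the iteration count are our assumptions, and the layout optimisation is omitted entirely.

```python
import math

def smooth_knn_weights(dists: list[float], n_iter: int = 64) -> list[float]:
    """Membership weights exp(-max(0, d - rho) / sigma) for one point's k neighbours.

    rho is the distance to the nearest neighbour, so that neighbour always gets
    weight 1.0; sigma is found by binary search so the weights sum to log2(k).
    """
    k = len(dists)
    rho = min(dists)
    target = math.log2(k)
    lo, hi, sigma = 0.0, math.inf, 1.0
    for _ in range(n_iter):
        total = sum(math.exp(-max(0.0, d - rho) / sigma) for d in dists)
        if total > target:  # weights too large: sigma must shrink
            hi = sigma
        else:               # weights too small: sigma must grow
            lo = sigma
        sigma = sigma * 2 if hi == math.inf else (lo + hi) / 2
    return [math.exp(-max(0.0, d - rho) / sigma) for d in dists]

def fuzzy_graph(points: list[list[float]], k: int) -> dict[tuple[int, int], float]:
    """Symmetric weighted k-NN graph over the points (Euclidean distance)."""
    directed: dict[tuple[int, int], float] = {}
    for i, p in enumerate(points):
        # The k nearest other points, closest first.
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))[:k]
        dists = [math.dist(p, points[j]) for j in others]
        for j, w in zip(others, smooth_knn_weights(dists)):
            directed[(i, j)] = w
    # Fuzzy union of both edge directions: a + b - a * b.
    graph: dict[tuple[int, int], float] = {}
    for (i, j), a in directed.items():
        b = directed.get((j, i), 0.0)
        graph[(min(i, j), max(i, j))] = a + b - a * b
    return graph

# Toy 2D "vectors": two clearly separated groups of three points.
points = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]]
for edge, weight in sorted(fuzzy_graph(points, k=2).items()):
    print(edge, round(weight, 3))
```

For the complete method, the umap-learn library provides `umap.UMAP(n_components=2).fit_transform(vectors)`; we have not verified which parameters were used for the Datalab CSN.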

The resulting map serves as a visual interpretation of the multidimensional data, in which images with visual similarities appear close to each other. However, reducing 2048 dimensions to only two can never fully reflect the richness of the original data. While useful for visual examination and exploration, the map is only an approximation of the underlying multidimensional space, so we should be careful not to over-interpret it.
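One simple way to check how much neighbourhood structure survives the projection is to compare each object's k nearest neighbours in the original space with its k nearest neighbours on the 2D map. The sketch below computes this neighbourhood preservation rate; it is our own illustration with hypothetical function names, not a score reported by the Datalab CSN.

```python
import math

def knn_indices(points: list[list[float]], i: int, k: int) -> set[int]:
    """Indices of the k nearest neighbours of point i (Euclidean, excluding i itself)."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.dist(points[i], points[j]))
    return set(others[:k])

def neighbourhood_preservation(high: list[list[float]], low: list[list[float]], k: int) -> float:
    """Average fraction of each point's k original neighbours that are also
    among its k neighbours on the map (1.0 = all neighbourhoods preserved)."""
    n = len(high)
    overlap = sum(len(knn_indices(high, i, k) & knn_indices(low, i, k)) for i in range(n))
    return overlap / (n * k)

# Toy example: a "map" that swaps the positions of objects 1 and 2.
high = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [10.0, 0.0, 0.0], [11.0, 0.0, 0.0]]
low = [[0.0, 0.0], [10.0, 0.0], [1.0, 0.0], [11.0, 0.0]]
print(neighbourhood_preservation(high, low, k=1))  # 0.0
```

A rate of 1.0 means every neighbourhood is preserved; values well below that are a reminder that proximity on the map should be read as approximate.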

Using the Datalab CSN

The Collection Space Navigator (CSN) user interface allows you to explore the collection data by zooming in and out, moving around, filtering the museum objects by time, or using advanced search queries to focus on specific interests and aspects. The filtered data can be downloaded as metadata (CSV file) or as a spatial similarity map (PNG image), making it available for further research. Clicking on an object reveals the corresponding collection data in the museum database.
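As an example of further research with the CSV export, the sketch below reads the exported metadata with Python's standard `csv` module and counts objects per value of one column. The column name `object_type` and the sample rows are hypothetical; check the header row of your own export for the actual column names.

```python
import csv
import io
from collections import Counter
from typing import IO

def load_export(f: IO[str]) -> list[dict[str, str]]:
    """Read an exported CSV file into one dict per museum object (keys = header row)."""
    return list(csv.DictReader(f))

def count_by(rows: list[dict[str, str]], column: str) -> Counter:
    """Count objects per distinct value of a metadata column."""
    return Counter(row[column] for row in rows)

# Hypothetical sample standing in for a downloaded export.
sample = io.StringIO(
    "id,object_type\n"
    "1,photograph\n"
    "2,vase\n"
    "3,photograph\n"
)
rows = load_export(sample)
print(count_by(rows, "object_type").most_common())  # [('photograph', 2), ('vase', 1)]
```

For a real file, open it with `open(path, newline="", encoding="utf-8")` and pass the file object to `load_export`; the UTF-8 encoding is an assumption about the export.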

With the sliders on the right-hand side under “Time Filter”, you can not only set the range of years used to filter objects by their creation dates but also display the distribution of the filtered subset as interactive histograms. Clicking on a histogram lets you glide through time by swiping over the bars, displaying all the objects each bar contains.
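The logic behind a time filter with a histogram can be sketched in a few lines of Python: keep the objects whose creation year lies in the selected range, then count them per bin. The `year` field, the ten-year bins and the exclusion of undated objects are our assumptions for illustration, not necessarily how the CSN interface handles them.

```python
from collections import Counter

def filter_by_years(objects: list[dict], start: int, end: int) -> list[dict]:
    """Keep objects whose creation year lies within [start, end] (inclusive)."""
    return [o for o in objects if o.get("year") is not None and start <= o["year"] <= end]

def year_histogram(objects: list[dict], bin_size: int = 10) -> dict[int, int]:
    """Count objects per bin of `bin_size` years, keyed by the bin's first year."""
    counts = Counter((o["year"] // bin_size) * bin_size for o in objects)
    return dict(sorted(counts.items()))

# Hypothetical objects with creation years.
objects = [
    {"id": "A", "year": 1895},
    {"id": "B", "year": 1902},
    {"id": "C", "year": 1907},
    {"id": "D", "year": 1931},
    {"id": "E", "year": None},  # undated objects drop out of the time filter
]
subset = filter_by_years(objects, 1900, 1950)
print(year_histogram(subset))  # {1900: 2, 1930: 1}
```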

The spatial navigation and filtering options allow users to curate their own journey through the collections and discover connections and patterns that might have remained unnoticed with traditional approaches to overviewing collection data. This approach makes clusters and correlations visible and also exposes existing “errors” in the metadata, laying the groundwork for improving the data.

For example, one of the internal issues that the Datalab CSN uncovered was that some IDs had been incorrectly assigned in the image database of the Badisches Landesmuseum. A previous experiment to automatically match image content from two different sources had introduced a few errors, which are now evident in the CSN. The CSN thus makes it possible for all users to work with the collections and suggest corrections or improvements.
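One kind of error that faulty image matching can produce is the same image being attached to more than one object ID; on the map, such records would typically end up at (almost) the same spot. A minimal sketch of how such cases could be flagged automatically is to look for pairs of IDs whose image vectors are nearly identical. The threshold of 0.99 and the sample vectors are hypothetical, and this is not the procedure the Badisches Landesmuseum used.

```python
import math
from itertools import combinations

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def near_duplicates(vectors: dict[str, list[float]], threshold: float = 0.99) -> list[tuple[str, str, float]]:
    """List pairs of distinct object IDs whose image vectors are almost identical."""
    flagged = []
    for (id_a, vec_a), (id_b, vec_b) in combinations(vectors.items(), 2):
        similarity = cosine_similarity(vec_a, vec_b)
        if similarity >= threshold:
            flagged.append((id_a, id_b, similarity))
    return flagged

# Hypothetical vectors: obj_1 and obj_2 carry the same image under different IDs.
vectors = {
    "obj_1": [0.2, 0.7, 0.1],
    "obj_2": [0.2, 0.7, 0.1],
    "obj_3": [0.9, 0.0, 0.4],
}
for id_a, id_b, sim in near_duplicates(vectors):
    print(f"check {id_a} / {id_b}: similarity {sim:.3f}")
```

Flagged pairs are only candidates: a curator still has to decide whether two records genuinely share an image by mistake or simply depict very similar objects.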

We look forward to hearing your ideas!

Explore the Collections

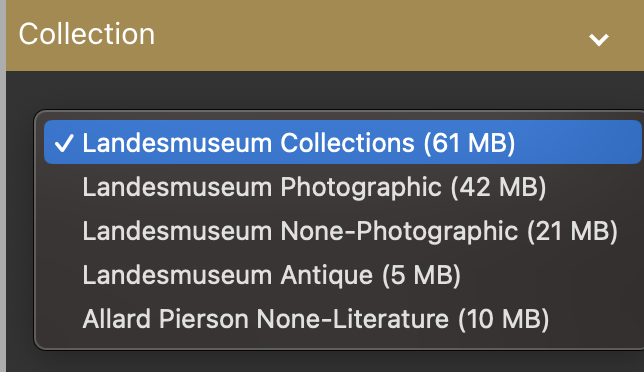

The “Landesmuseum Collections” dataset (61 MB) encompasses more than 27,000 objects. We divided this large selection into smaller subsets to improve loading times and to highlight different aspects of the collection. “Landesmuseum Photographic” (42 MB) lets you discover over 18,000 photographs in the Badisches Landesmuseum’s holdings. “Landesmuseum None-Photographic” (21 MB) presents the reverse, with 9,000 objects that are not photographic works. “Landesmuseum Antique” (5 MB) puts a special focus on almost 3,000 treasures dating from before the 15th century.

The Allard Pierson collection is presented without its extensive literature holdings in order to highlight the visual objects: “Allard Pierson None-Literature” (10 MB) comprises 4,000 museum objects.

Across the collections of the Badisches Landesmuseum and the Allard Pierson, the CSN becomes a versatile tool for researchers, curators and interested audiences. It not only enhances the exploration of individual subsets but also serves as a starting point for further research. Its filtering options help users uncover relationships and trends that contribute to a richer understanding of each collection as a whole.

The Collection Space Navigator was developed by Tillmann Ohm and Mar Canet Solà and adapted within the framework of the Creative User Empowerment project in 2023 by Tillmann Ohm, Etienne Posthumus and Sonja Thiel.

Datalab CSN: https://datalab.landesmuseum.de/CSN/

Open Source code Datalab CSN: https://github.com/Badisches-Landesmuseum/CSN

Original Collection Space Navigator: https://collection-space-navigator.github.io/

Resources

Arnold, T., & Tilton, L. (2023). Distant Viewing: Computational Exploration of Digital Images. The MIT Press. https://doi.org/10.7551/mitpress/14046.001.0001

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). ImageNet: A large-scale hierarchical image database. 2009 IEEE Conference on Computer Vision and Pattern Recognition. https://doi.org/10.1109/CVPR.2009.5206848

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep Residual Learning for Image Recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/CVPR.2016.90

McInnes, L., Healy, J., & Melville, J. (2018). UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. arXiv preprint arXiv:1802.03426. https://arxiv.org/abs/1802.03426

Ohm, T., Canet Solà, M., Karjus, A., & Schich, M. (2023). Collection Space Navigator: An Interactive Visualization Interface for Multidimensional Datasets. Proceedings of the 16th International Symposium on Visual Information Communication and Interaction. https://doi.org/10.1145/3615522.3615546